In this paper, law professor Lynn LoPucki ponders the question: What happens if you turn over control of a corporate entity to an AI?

Pretty terrible stuff. Odds are high you'd see AI-controlled entities emerge first in criminal enterprises, as ways of setting up organizations that engage in nefarious activities but cannot be meaningfully punished (in human terms, anyway), even if they're caught, he argues. Given their corporate personhood in the US, they'd enjoy the rights to own property, to enter into contracts, to legal counsel, to free speech, and to buy politicians -- so they could wreak a lot of havoc.

The prospect of AI running firms and exploiting legal loopholes has been explored in cyberpunk sci-fi, so it's mesmerizing to watch real-world law start to grapple with this. It's coming on the heels of various thinkers pointing out that Silicon Valley's fears of killer AI are predicated on the idea that AIs would act in precisely the way today's corporations do: i.e. that they'd be remorselessly devoted to their self-interest, immortal and immoral, and regard humans as mere gut-flora -- to use Cory's useful metaphor -- in pursuit of their continued existence. Or to put it another way, corporations already evince much of the terrifying behavior LoPucki predicts we'll see from algorithmic entities; it's not clear that any world government is willing to bring to justice any of the humans putatively in control of today's crimedoing firms, so even the moral immunity you'd see in AIs is basically already in place.

LoPucki assumes that AI capable of running a corporate entity will likely emerge by the 2070s -- rather quickly if you believe AI doubters (though naively slowly if you believe others). As he points out ...

AEs are inevitable because they have three advantages over human-controlled businesses. They can act and react more quickly, they don't lose accumulated knowledge through personnel changes, and they cannot be held responsible for their wrongdoing in any meaningful way.

AEs constitute a threat to humanity because the only limits on their conduct are the limits the least restrictive human creator imposes. As the science advances, algorithms' abilities will improve until they far exceed those of humans. What remains to be determined is whether humans will be successful in imposing controls before the opportunity to do so has passed.

This Article has addressed a previously unexplored aspect of the artificial-intelligence-control problem. Giving algorithms control of entities endows algorithms with legal rights and gives them the ability to transact anonymously with humans. Once granted, those rights and abilities would be difficult to revoke. Under current law, algorithms could inhabit entities of most types and nationalities. They could move from one type or nationality to another, thereby changing their governing law. They could easily hide from regulators in a system where the controllers of non-publicly-traded entities are all invisible. Because the revocation of AEs' rights and abilities would require the amendment of thousands of entity laws, the entity system is less likely to function as a means of controlling artificial intelligence than as a means by which artificial intelligence will control humans.

LoPucki's white paper became a top hit on the SSRN site last week, apparently -- as he notes in this SSRN blog post -- because anti-terrorism people are super flipped out about it:

One of the scariest parts of this project is that the flurry of SSRN downloads that put this manuscript at the top last week apparently came through the SSRN Combating Terrorism eJournal. That the experts on combating terrorism are interested in my manuscript seems to me to warrant concern.

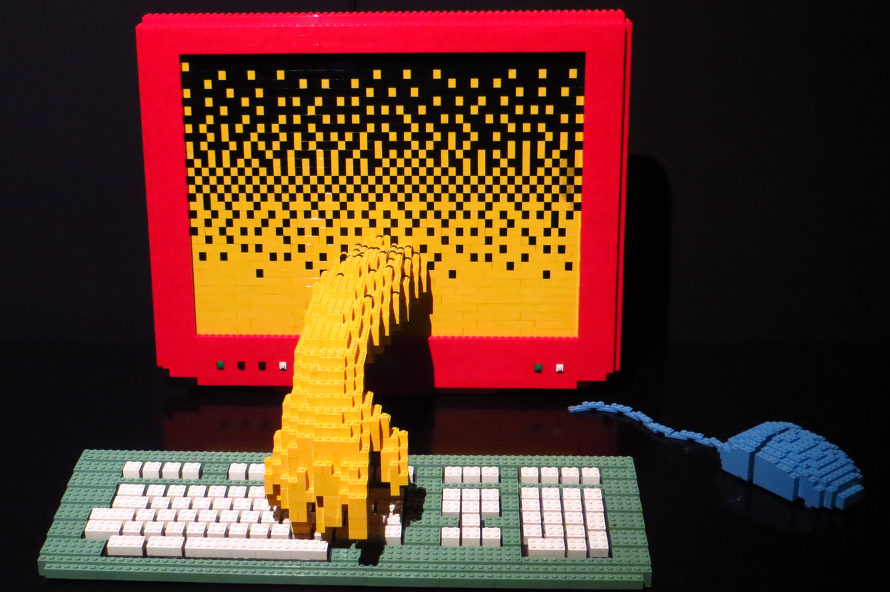

(CC-licensed photo via Howard Lake)